
One of the important issues that has been brought up over the course of the Olympic stress-net release is the large amount of data that clients are required to store; over little more than three months of operation, and particularly during the last month, the amount of data in each Ethereum client's blockchain folder has ballooned to an impressive 10-40 gigabytes, depending on which client you are using and whether or not compression is enabled. It is important to note that this is a stress test scenario in which users are incentivized to dump transactions on the blockchain, paying only free test-ether as a transaction fee, so transaction throughput is several times higher than Bitcoin's. It is nevertheless a legitimate concern for users, who in many cases do not have hundreds of gigabytes to spare for storing other people's transaction histories.

First of all, let us explore why the current Ethereum client database is so large. Ethereum, unlike Bitcoin, has the property that every block contains something called the "state root": the root hash of a specialized kind of Merkle tree which stores the entire state of the system. All account balances, contract storage, contract code and account nonces are inside.

The purpose of this is simple: it allows a node given only the last block, together with some assurance that the last block actually is the most recent block, to "synchronize" with the blockchain extremely quickly without processing any historical transactions. The node simply downloads the rest of the tree from nodes in the network (the proposed HashLookup wire protocol message will facilitate this), verifies that the tree is correct by checking that all of the hashes match up, and then proceeds from there. In a fully decentralized context, this will likely be done through an advanced version of Bitcoin's headers-first-verification strategy, which will look roughly as follows:

- Download as many block headers as the client can get its hands on.

- Determine the header which is at the end of the longest chain. Starting from that header, go back 100 blocks for safety, and call the block at that position P100(H) ("the hundredth-generation grandparent of the head").

- Download the state tree from the state root of P100(H), using the HashLookup message (note that after the first one or two rounds, this can be parallelized among as many peers as desired). Verify that all parts of the tree match up.

- Proceed normally from there.
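The second step above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the `Header` type and `pick_sync_point` function are hypothetical names, and "longest chain" is approximated by the highest block number, whereas a real client would compare chains by accumulated proof of work.

```python
from dataclasses import dataclass
from typing import Dict, Optional


@dataclass(frozen=True)
class Header:
    """Hypothetical, stripped-down block header used only for this sketch."""
    hash: str
    parent: Optional[str]  # None for the genesis block
    number: int
    state_root: str


def pick_sync_point(headers: Dict[str, Header], depth: int = 100) -> Header:
    """Return the ancestor `depth` blocks behind the tip of the longest known chain.

    Assumption: the tip is simply the header with the highest block number.
    """
    block = max(headers.values(), key=lambda h: h.number)
    for _ in range(depth):
        if block.parent is None or block.parent not in headers:
            break  # chain is shorter than `depth`; stop at the oldest known ancestor
        block = headers[block.parent]
    return block


# Build a toy chain of 150 headers and pick P100(H).
chain = {
    f"h{i}": Header(f"h{i}", f"h{i - 1}" if i > 0 else None, i, f"root{i}")
    for i in range(150)
}
sync_point = pick_sync_point(chain)
```

The state tree would then be downloaded starting from `sync_point.state_root`, and every block after that point processed normally.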

For light clients, the state root is even more advantageous: they can immediately determine the exact balance and status of any account by simply asking the network for a particular branch of the tree, without needing to follow Bitcoin's multi-step 1-of-N "ask for all transaction outputs, then ask for all transactions spending those outputs, and take the remainder" light-client model.
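To make the "ask for a branch" idea concrete, here is a minimal sketch of branch verification. It is an assumption-laden simplification: it uses a plain binary Merkle tree with SHA-256 rather than Ethereum's actual Patricia tree and hash function, and the function names are hypothetical.

```python
import hashlib
from typing import List, Tuple


def h(data: bytes) -> bytes:
    return hashlib.sha256(data).digest()


def build_levels(leaves: List[bytes]) -> List[List[bytes]]:
    """Return every level of the tree, leaves first. Leaf count must be a power of two."""
    levels = [[h(leaf) for leaf in leaves]]
    while len(levels[-1]) > 1:
        prev = levels[-1]
        levels.append([h(prev[i] + prev[i + 1]) for i in range(0, len(prev), 2)])
    return levels


def make_branch(levels: List[List[bytes]], index: int) -> List[Tuple[bytes, bool]]:
    """Collect sibling hashes from leaf to root; the flag is True if the sibling is on the right."""
    branch = []
    for level in levels[:-1]:
        sibling = index ^ 1
        branch.append((level[sibling], sibling > index))
        index //= 2
    return branch


def verify_branch(root: bytes, leaf: bytes, branch: List[Tuple[bytes, bool]]) -> bool:
    """Recompute the root from one leaf and its branch, as a light client would."""
    node = h(leaf)
    for sibling, sibling_is_right in branch:
        node = h(node + sibling) if sibling_is_right else h(sibling + node)
    return node == root


accounts = [b"alice:10", b"bob:25", b"carol:7", b"dave:0"]
levels = build_levels(accounts)
state_root = levels[-1][0]
branch = make_branch(levels, 2)  # proof for carol's entry
ok = verify_branch(state_root, b"carol:7", branch)
```

The light client only needs the root (from a block header) and a branch whose length is logarithmic in the number of accounts.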

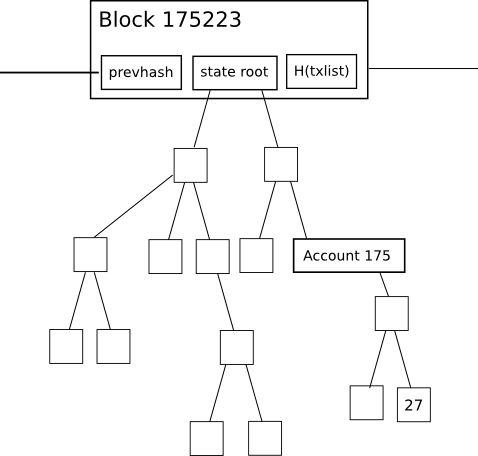

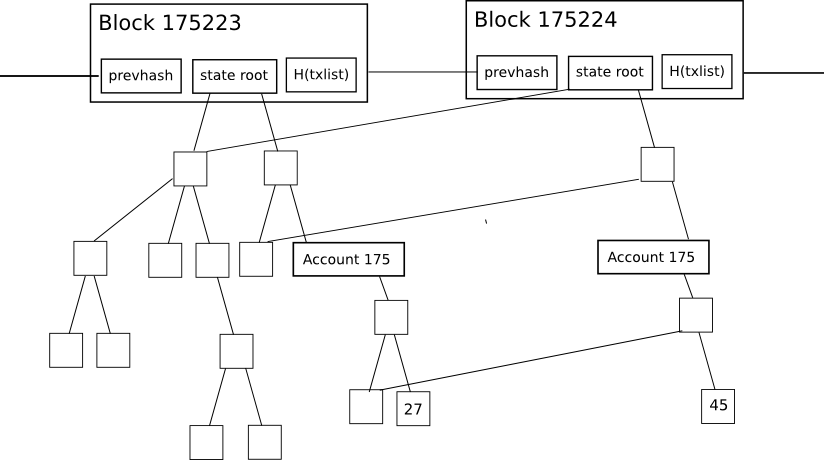

However, this state tree mechanism has an important disadvantage if implemented naively: the intermediate nodes in the tree greatly increase the amount of disk space required to store all the data. To see why, consider how the tree changes from one block to the next (see the diagrams above).

The change in the tree during each individual block is fairly small, and the magic of the tree as a data structure is that most of the data can simply be referenced twice without being copied. Even so, for every change to the state that is made, a logarithmically large number of nodes (ie. ~5 at 1000 nodes, ~10 at 1000000 nodes, ~15 at 1000000000 nodes) need to be stored twice, one version for the old tree and one version for the new tree. As a node processes every block, we can thus expect the total disk space utilization to be, in computer science terms, roughly O(n*log(n)), where n is the transaction load. In practical terms, the Ethereum blockchain is only 1.3 gigabytes, but the size of the database including all these extra nodes is 10-40 gigabytes.
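The effect can be reproduced with a toy content-addressed tree. The sketch below is an illustration only: it assumes a fixed-depth binary tree keyed by SHA-256 hashes, not the real Ethereum tree, and all names are hypothetical. A single leaf update writes one new node per level while the old version of each stays in the database.

```python
import hashlib
from typing import Dict, Tuple

DEPTH = 10  # 2**10 = 1024 leaves

# hash -> (left, right) for inner nodes, (value, b"") for leaves
db: Dict[bytes, Tuple[bytes, bytes]] = {}


def h(data: bytes) -> bytes:
    return hashlib.sha256(data).digest()


def put_leaf(value: bytes) -> bytes:
    key = h(b"leaf" + value)
    db[key] = (value, b"")
    return key


def put_inner(left: bytes, right: bytes) -> bytes:
    key = h(left + right)
    db[key] = (left, right)
    return key


def empty_root(depth: int) -> bytes:
    """Build an all-empty tree; identical subtrees share one database entry."""
    node = put_leaf(b"")
    for _ in range(depth):
        node = put_inner(node, node)
    return node


def update(root: bytes, index: int, value: bytes, depth: int = DEPTH) -> bytes:
    """Return the root of a new tree with leaf `index` replaced; old nodes are kept."""
    if depth == 0:
        return put_leaf(value)
    left, right = db[root]
    if (index >> (depth - 1)) & 1 == 0:
        left = update(left, index, value, depth - 1)
    else:
        right = update(right, index, value, depth - 1)
    return put_inner(left, right)


root0 = empty_root(DEPTH)
before = len(db)
root1 = update(root0, 5, b"balance=42")
added = len(db) - before  # DEPTH + 1 nodes written for a single leaf change
```

Both `root0` and `root1` remain fully readable afterwards, which is exactly why an unpruned database keeps growing with every state change.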

So, what can we do? One backward-looking fix is to simply go ahead and implement headers-first syncing, essentially resetting new users' hard disk consumption to zero, and allowing users to keep their hard disk consumption low by re-syncing every one or two months, but that is a somewhat ugly solution. The alternative approach is to implement state tree pruning: essentially, use reference counting to track when nodes in the tree (here using "node" in the computer-science sense of "piece of data that is somewhere in a graph or tree structure", not "computer on the network") drop out of the tree, and at that point put them on "death row". Unless the node somehow becomes used again within the next X blocks (eg. X = 5000), it should be permanently deleted from the database once that number of blocks has passed. Essentially, we store the tree nodes that are part of the current state, and we even store recent history, but we do not store history older than 5000 blocks.

X should be set as low as possible to conserve space, but setting X too low compromises robustness: once this technique is implemented, a node cannot revert back more than X blocks without essentially restarting synchronization from scratch. Now, let's see how this approach can be implemented fully, taking into account all of the corner cases:

- When processing a block with number N, keep track of all nodes (in the state, transaction and receipt trees) whose reference count drops to zero. Place the hashes of these nodes into a "death row" database in some kind of data structure so that the list can later be recalled by block number (specifically, block number N + X), and mark the node database entry itself as being deletion-worthy at block N + X.

- If a node that is on death row gets reinstated (a practical example of this is account A acquiring some particular balance/nonce/code/storage combination f, then switching to a different value g, and then account B acquiring state f while the node for f is on death row), then increase its reference count back to one. If that node is deleted again at some future block M (with M > N), then put it back on the future block's death row to be deleted at block M + X.

- When you get to processing block N + X, recall the list of hashes that you logged back during block N. Check the node associated with each hash; if the node is still marked for deletion during that specific block (ie. not reinstated, and importantly not reinstated and then re-marked for deletion later), delete it. Delete the list of hashes in the death row database as well.

- Sometimes, the new head of a chain will not be on top of the previous head and you will need to revert a block. For these cases, you will need to keep in the database a journal of all changes to reference counts (that's "journal" as in journaling file systems; essentially an ordered list of the changes made); when reverting a block, delete the death row list generated when producing that block, and undo the changes made according to the journal (and delete the journal when you're done).

- When processing a block, delete the journal at block N - X; you are not capable of reverting more than X blocks anyway, so the journal is superfluous (and, if kept, would in fact defeat the whole point of pruning).
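The steps above can be sketched as a small in-memory store. This is a hedged illustration, not the pyeth implementation: the `PruningDB` class and its method names are hypothetical, deletion is done at the start of block N + X (so the sketch assumes X >= 1), and the caller is assumed to report every reference added or dropped while processing a block.

```python
from collections import defaultdict
from typing import Dict, List, Optional, Tuple

# (hash, previous reference count, previous scheduled deletion block)
JournalEntry = Tuple[bytes, int, Optional[int]]


class PruningDB:
    def __init__(self, x: int):
        assert x >= 1, "this sketch prunes at the start of block N + X"
        self.x = x
        self.block = -1
        self.nodes: Dict[bytes, bytes] = {}
        self.refcount: Dict[bytes, int] = {}
        self.delete_at: Dict[bytes, int] = {}  # node hash -> block at which to delete
        self.death_row: Dict[int, List[bytes]] = defaultdict(list)
        self.journal: Dict[int, List[JournalEntry]] = {}

    def begin_block(self, n: int) -> None:
        self.block = n
        self.journal[n] = []
        self.journal.pop(n - self.x, None)  # too old to ever be reverted
        for key in self.death_row.pop(n, []):
            # Delete only if still marked for this block (not reinstated or re-marked).
            if self.delete_at.get(key) == n:
                del self.nodes[key], self.refcount[key], self.delete_at[key]

    def _log(self, key: bytes) -> None:
        entry = (key, self.refcount.get(key, 0), self.delete_at.get(key))
        self.journal[self.block].append(entry)

    def inc_ref(self, key: bytes, value: bytes) -> None:
        self._log(key)
        self.nodes[key] = value
        self.refcount[key] = self.refcount.get(key, 0) + 1
        self.delete_at.pop(key, None)  # reinstated: take it off death row

    def dec_ref(self, key: bytes) -> None:
        self._log(key)
        self.refcount[key] -= 1
        if self.refcount[key] == 0:
            due = self.block + self.x
            self.delete_at[key] = due
            self.death_row[due].append(key)

    def revert_block(self) -> None:
        n = self.block
        self.death_row.pop(n + self.x, None)  # list generated by the reverted block
        for key, old_count, old_due in reversed(self.journal.pop(n)):
            if old_count == 0 and old_due is None:
                # The node did not exist before this block.
                self.nodes.pop(key, None)
                self.refcount.pop(key, None)
            else:
                self.refcount[key] = old_count
            if old_due is None:
                self.delete_at.pop(key, None)
            else:
                self.delete_at[key] = old_due
        self.block = n - 1


db = PruningDB(x=2)
db.begin_block(0); db.inc_ref(b"f", b"state f")
db.begin_block(1); db.dec_ref(b"f")              # f goes on death row, due at block 3
db.begin_block(2); db.inc_ref(b"f", b"state f")  # f is reinstated by another account
db.begin_block(3)                                # f survives its original deletion block
```

Calling `revert_block()` for a block whose journal has already been dropped raises a `KeyError`, which mirrors the rule that a node cannot revert more than X blocks.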

Once this is done, the database should only be storing state nodes associated with the last X blocks, so you will still have all the information you need from those blocks but nothing more. On top of this, there are further optimizations. In particular, after X blocks, transaction and receipt trees should be deleted entirely, and even blocks may arguably be deleted as well, although there is an important argument for keeping some subset of "archive nodes" that store absolutely everything so as to help the rest of the network acquire the data that it needs.

Now, how much savings can this give us? As it turns out, quite a lot! In particular, if we were to take the ultimate daredevil route and go X = 0 (ie. lose absolutely all ability to handle even single-block forks, storing no history whatsoever), then the size of the database would essentially be the size of the state: a value which, even now (this data was grabbed at block 670000), stands at roughly 40 megabytes, the majority of which is made up of accounts whose storage slots were filled to deliberately spam the network. At X = 100000, we would get essentially the current size of 10-40 gigabytes, as most of the growth happened in the last hundred thousand blocks, and the extra space required for storing journals and death row lists would make up the rest of the difference. At every value in between, we can expect the disk space growth to be linear (ie. X = 10000 would take us about ninety percent of the way there to near-zero).
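As a rough back-of-the-envelope check of that linear claim, the following sketch interpolates between the two endpoints quoted above. The function name and the linear model itself are assumptions for illustration; the only inputs taken from the text are the ~40 megabyte state size, the 10-40 gigabyte unpruned size, and X = 100000 as the point where pruning saves essentially nothing.

```python
STATE_GB = 0.04    # ~40 MB of live state at X = 0, from the text
FULL_X = 100_000   # X at which the database is roughly its current, unpruned size


def estimated_db_size_gb(x: int, unpruned_gb: float) -> float:
    """Linearly interpolate between the bare state (X = 0) and the unpruned database."""
    fraction = min(max(x, 0), FULL_X) / FULL_X
    return STATE_GB + fraction * (unpruned_gb - STATE_GB)


# X = 10000 for the low and high ends of the 10-40 GB range.
low = estimated_db_size_gb(10_000, 10.0)
high = estimated_db_size_gb(10_000, 40.0)
```

Under these assumptions, X = 10000 lands at roughly 1 to 4 gigabytes, i.e. about a tenth of the unpruned size.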

Note that we may want to pursue a hybrid strategy: keeping every block but not every state tree node; in this case, we would need to add roughly 1.4 gigabytes to store the block data. It's important to note that the cause of the blockchain size is NOT fast block times; currently, the block headers of the last three months make up roughly 300 megabytes, and the rest is transactions of the last one month, so at high levels of usage we can expect to continue to see transactions dominate. That said, light clients will also need to prune block headers if they are to survive in low-memory circumstances.

The strategy described above has been implemented in a very early alpha form in pyeth; it will be implemented properly in all clients in due time after Frontier launches, as such storage bloat is only a medium-term and not a short-term scalability concern.
